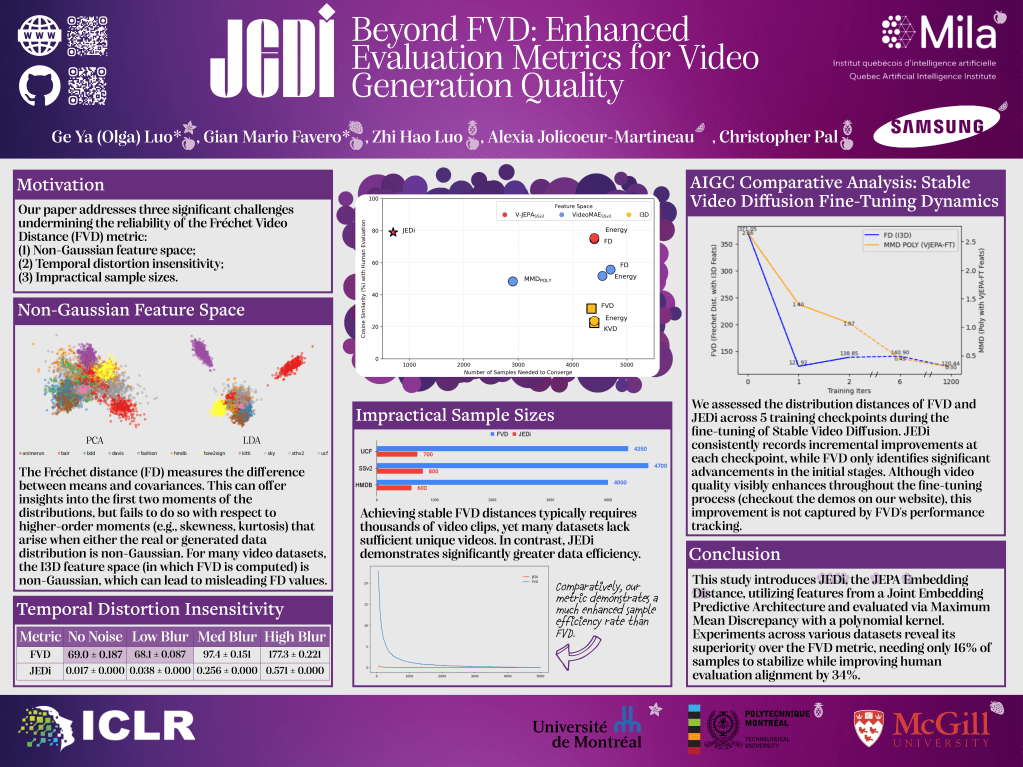

TL;DR: We propose JEDi, a new metric for evaluating the quality of generated videos that overcomes many limitations of widely used methods such as Fréchet Video Distance (FVD) and LPIPS. JEDi improves on FVD by:

- Relaxing Gaussian assumptions: No longer constrained by multivariate Gaussian distributional assumptions, enabling broader applicability.

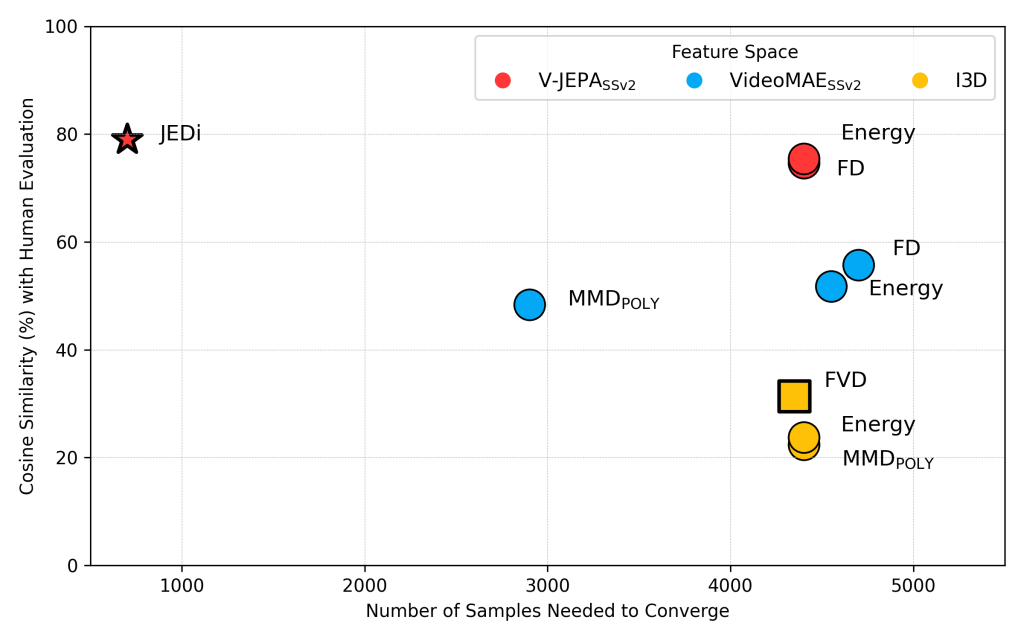

- Enhancing sample efficiency: Requiring fewer samples to achieve reliable evaluations, reducing computational costs.

- Aligning with human perception: Demonstrating superior correlation with human visual assessment, providing a more accurate measure of video generation quality.

Key Takeaways: Best Practices for Distributional Evaluation

- Do NOT average distributional metric distances over mini-batches. Distributional metrics need large sample sizes to converge and to capture the underlying distribution accurately. An exact distance would in theory require infinite data, so compute the metric once on the entire dataset rather than averaging values computed on small subsets.

- Extract features in mini-batches. Reliable distributional evaluation needs many samples, and GPU memory constraints may make it infeasible to process the entire dataset at once. Instead, extract features in mini-batches, save them, and use the full set of features during evaluation.

- Ensure consistent video settings. When comparing two distributions, verify that both use identical video settings, such as frame resolution, frame rate, and video length. Use the same settings when comparing against results from other studies.

- Report the ground-truth baseline. Report the distribution distance between the ground-truth training and testing sets. This gives a reference point: the distance for generated videos can be judged against the distance that already exists between two sets of real data.
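The practices above can be sketched as a small evaluation routine. This is a minimal illustration under stated assumptions, not the authors' pipeline: `encoder` and `distance_fn` are hypothetical placeholders for any feature extractor and any distributional distance, videos are assumed to be arrays shaped `(num_videos, frames, height, width, channels)`, and comparing the generated set against the training set is an illustrative choice.

```python
import numpy as np

def extract_features(videos, encoder, batch_size=8):
    """Run `encoder` on mini-batches and return all features stacked together."""
    chunks = []
    for start in range(0, len(videos), batch_size):
        batch = videos[start:start + batch_size]
        chunks.append(np.asarray(encoder(batch)))
    # The metric is computed later on this full array, never per batch.
    return np.concatenate(chunks, axis=0)

def check_same_settings(a, b):
    """Check that two video sets share frame count, resolution, and channels.

    Frame rate is not visible in the array shape and must be checked separately.
    """
    if a.shape[1:] != b.shape[1:]:
        raise ValueError(f"Video settings differ: {a.shape[1:]} vs {b.shape[1:]}")

def evaluate(train, test, generated, encoder, distance_fn, batch_size=8):
    """Return the ground-truth baseline and the generated-set distance."""
    check_same_settings(train, test)
    check_same_settings(train, generated)
    f_train = extract_features(train, encoder, batch_size)
    f_test = extract_features(test, encoder, batch_size)
    f_gen = extract_features(generated, encoder, batch_size)
    return {
        # Distance between two real sets: the reference point.
        "baseline_train_vs_test": distance_fn(f_train, f_test),
        # Distance for the generated set, computed once on all features.
        "generated_vs_train": distance_fn(f_train, f_gen),
    }
```

Reporting both numbers side by side lets a reader see how far the generated set sits from real data relative to the gap between two real sets.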

Abstract: The Fréchet Video Distance (FVD) is a widely adopted metric for evaluating video generation distribution quality. However, its effectiveness relies on critical assumptions. Our analysis reveals three significant limitations: (1) the non-Gaussianity of the Inflated 3D Convnet (I3D) feature space; (2) the insensitivity of I3D features to temporal distortions; (3) the impractical sample sizes required for reliable estimation. These findings undermine FVD’s reliability and show that FVD falls short as a standalone metric for video generation evaluation. After extensive analysis of a wide range of metrics and backbone architectures, we propose JEDi, the JEPA Embedding Distance, based on features derived from a Joint Embedding Predictive Architecture and measured using Maximum Mean Discrepancy with a polynomial kernel. Our experiments on multiple open-source datasets show clear evidence that JEDi is a superior alternative to the widely used FVD metric, requiring only 16% of the samples to reach its steady value while increasing alignment with human evaluation by 34% on average.
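The Maximum Mean Discrepancy with a polynomial kernel mentioned in the abstract can be sketched in a few lines of NumPy. This is an illustration, not the authors' implementation: the kernel parameters (degree 3, `gamma = 1/d`, `coef0 = 1`) are assumed defaults borrowed from the common Kernel Inception Distance setup, the function names are hypothetical, and the inputs are assumed to be feature matrices of shape `(num_samples, feature_dim)` that were already extracted from a video encoder.

```python
import numpy as np

def polynomial_kernel(x, y, degree=3, gamma=None, coef0=1.0):
    """k(x, y) = (gamma * <x, y> + coef0) ** degree for all row pairs."""
    if gamma is None:
        gamma = 1.0 / x.shape[1]  # assumed default: 1 / feature dimension
    return (gamma * (x @ y.T) + coef0) ** degree

def mmd2_unbiased(x, y, **kernel_kwargs):
    """Unbiased estimate of the squared MMD between two feature sets."""
    m, n = len(x), len(y)
    k_xx = polynomial_kernel(x, x, **kernel_kwargs)
    k_yy = polynomial_kernel(y, y, **kernel_kwargs)
    k_xy = polynomial_kernel(x, y, **kernel_kwargs)
    # Exclude the diagonal (a sample paired with itself) from within-set terms.
    term_xx = (k_xx.sum() - np.trace(k_xx)) / (m * (m - 1))
    term_yy = (k_yy.sum() - np.trace(k_yy)) / (n * (n - 1))
    return term_xx + term_yy - 2.0 * k_xy.mean()

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(200, 16))
    same = rng.normal(size=(200, 16))            # same distribution as `real`
    shifted = rng.normal(loc=1.0, size=(200, 16))  # mean-shifted distribution
    print("same distribution:   ", mmd2_unbiased(real, same))
    print("shifted distribution:", mmd2_unbiased(real, shifted))
```

Unlike the Fréchet distance, this estimator compares the two sets directly through kernel evaluations and does not fit a Gaussian to either one. Because the estimate is unbiased, it can come out slightly negative when the two sets are drawn from the same distribution.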